Hi, I’m Mahanthesh

Here’s what you should know about me.

As a Master’s student at Cleveland State University specializing in Computer Science, my journey is rooted in innovation and a desire to create impactful solutions. My research is dedicated to improving the lives of disabled individuals by tackling one of their most challenging and high-priority tasks: feeding.

At the Center for Human-Machine Systems Lab, led by Eric Schearer, I am developing Robot Assisted Feeding systems that integrate robotics with artificial intelligence. This project is not just about technology; it's about empathy and enhancing autonomy for those who face daily struggles with simple tasks.

In addition to my primary focus, I have delved into haptics, tactile sensing, and actuators for haptic feedback gloves, exploring their applications in augmented reality, virtual reality, and robotics. My commitment to innovation has allowed me to pitch these projects at various university competitions, where I've secured a total of $30,000 in grant funds.

RESEARCH

ROBOT ASSISTED FEEDING

Over 1 billion people live with motor impairments, and the United States alone has about 1 million individuals who need assistance with feeding. Our solution, Robot Assisted Feeding, helps individuals feed themselves without any assistance.

Using voice recognition technology to receive commands, combined with Vision Language Models (VLM) for ground truth verification, our system leverages zero-shot learning visual models to accurately detect food items. Drawing from our comprehensive library of skill sets, the robot effectively grasps the food and delivers it directly to the user.

3D VISUAL SERVOING

Designed and implemented a 3D pose-based visual servoing system that enables real-time tracking and dynamic interaction with a red ball. The system utilizes computer vision techniques to estimate the ball's position and orientation in 3D space, allowing a robotic arm or camera to adjust its movements accordingly. By continuously analyzing the object's pose, the servoing mechanism ensures precise and adaptive control, making it suitable for applications in object tracking, robotic manipulation, and autonomous navigation.
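The control idea behind pose-based visual servoing can be illustrated with a minimal sketch. This is not the project's code: it assumes the ball's 3D position in the camera frame is already estimated, considers translation only, and uses hypothetical names (`pbvs_velocity`, `simulate`) with an illustrative gain and speed limit. The commanded camera velocity is proportional to the error between the current and desired ball position, so the error shrinks at every step.

```python
import math

def pbvs_velocity(ball_pos, desired_pos, gain=1.0, max_speed=0.25):
    """Proportional velocity command (m/s) for the camera, translation only.

    ball_pos and desired_pos are (x, y, z) positions of the ball in the
    camera frame. All parameter values here are illustrative assumptions.
    """
    error = [b - d for b, d in zip(ball_pos, desired_pos)]
    velocity = [gain * e for e in error]
    speed = math.sqrt(sum(c * c for c in velocity))
    if speed > max_speed:
        # Scale the command down so the robot never exceeds max_speed.
        scale = max_speed / speed
        velocity = [c * scale for c in velocity]
    return tuple(velocity)

def simulate(ball_pos, desired_pos, steps=200, dt=0.05):
    """Toy closed loop: a static ball seen from a translating camera.

    Moving the camera by v * dt shifts the ball by -v * dt in the camera frame.
    """
    p = list(ball_pos)
    for _ in range(steps):
        v = pbvs_velocity(p, desired_pos)
        p = [pi - vi * dt for pi, vi in zip(p, v)]
    return tuple(p)
```

In a real system the `ball_pos` input would come from the vision pipeline on every frame, and the returned velocity would be sent to the arm or camera controller.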

PROJECTS

Robot Foundation Model using Gemini 2.0 on a Humanoid Robot

UCLA Hackathon - Google 2nd Prize

Our humanoid robot executes complex, multi-step everyday tasks through advanced spatial understanding and reasoning capabilities. When instructed to "clean up the table" or "sort kitchen items," it intelligently distinguishes between trash and valuable objects, properly disposing of waste while organizing items that should be preserved. The robot demonstrates contextual intelligence by selecting appropriate cleaning tools, knowing when a situation calls for a paper towel versus a brush. This practical reasoning enables it to handle varied household scenarios without specific programming for each task variation, making it truly useful for daily living assistance.

Haptic Feedback Gloves for AR/VR

Secured $30,000 Grant Funds from different pitch competitions

Xtangible - A tactile response system in glove form for VR and AR applications. These gloves replicate physical sensations including touching, grasping, and gentle pushing. The temperature functionality creates realistic warm or cool sensations against your palm. The gloves connect wirelessly to the headset with minimal latency, delivering the most immersive and realistic VR/AR experience available.

Mobile Manipulator Robot for Self-Feeding

Carnegie Mellon Hackathon - 2nd Grand Prize

A 6-DoF robotic arm mounted on a TurtleBot, which is controlled by an onboard Intel NUC running ROS2. The depth camera streams RGB and depth data to a VLM, which acts as the brain for autonomous navigation. Using computer vision and deep learning, we detect facial landmarks to tell whether the mouth is open or closed, which in turn triggers the arm to start the feeding process.
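One common way to turn facial landmarks into a mouth open/closed signal is a mouth aspect ratio: the lip gap divided by the mouth width. The sketch below shows that idea under stated assumptions. The four landmark arguments, the function names, and the 0.35 threshold are hypothetical and not taken from the project; a real system would get the points from a face landmark detector and tune the threshold per user.

```python
import math

def mouth_aspect_ratio(upper_lip, lower_lip, left_corner, right_corner):
    """Ratio of lip gap to mouth width, using 2D (x, y) pixel landmarks."""
    vertical = math.dist(upper_lip, lower_lip)
    horizontal = math.dist(left_corner, right_corner)
    if horizontal == 0:
        return 0.0  # degenerate detection, treat as closed
    return vertical / horizontal

def is_mouth_open(upper_lip, lower_lip, left_corner, right_corner,
                  threshold=0.35):
    """True when the ratio exceeds the (assumed) threshold."""
    ratio = mouth_aspect_ratio(upper_lip, lower_lip, left_corner, right_corner)
    return ratio > threshold
```

Because the ratio is normalized by mouth width, it stays roughly stable as the user moves closer to or farther from the camera.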

LeRobot Tele-operated using Meta Quest 2

Using Meta Quest 2 to teleoperate two SO101 follower arms to collect robot data.

Using the Meta Quest 2 headset to teleoperate two SO101 follower arms, enabling the collection of precise robot data essential for training the advanced GR00T N1 model.

Prosthetic ARM

1st place in KSIT, India Hackathon

The 3D-printed prosthetic arm incorporated servo motors for finger movement, controlled by EMG sensors. These sensors detect muscle movements on your arms and legs, allowing the prosthetic arm to respond accordingly.

LeRobot Playing Jenga using ACT Policy

Trained an ACT policy with teleoperated robot data to play Jenga

Trained an advanced VLA model by leveraging extensive and detailed tele-operated robot data, and successfully taught it how to skillfully master the complex game of Jenga with precision and strategic finesse.

Teleoperated TurtleBot 2

Brought an old TurtleBot 2 back to life.

Kobuki base with an Astra Pro depth camera, teleoperated using ROS2. Uses the depth camera for SLAM and point cloud mapping.

Eye Gaze, Hand Tracking and SLAM for Physical AI

Performed Eye Gaze, Hand Tracking and SLAM on egocentric videos

Used Meta Aria glasses to record egocentric human demonstration videos of ADLs and performed eye gaze prediction, hand tracking, and SLAM on them. These videos can be used for few-shot learning with foundation models such as Vision Language Action models (VLAs).

Robotic ARM

3D printed Robotic ARM

Created a 6-degree-of-freedom robotic arm fabricated through 3D printing. It was controlled via ROS MoveIt alongside a Leap Motion camera. The end effector was a soft-robotics-based gripper.

YOLO Football Analysis

Developed a Football Analysis system leveraging YOLO and OpenCV. YOLO is utilized for real-time object detection, identifying players, referees, and the ball within video footage. We enhance player identification by employing K-means for pixel segmentation and clustering, enabling team assignment based on jersey colors. This facilitates the calculation of a team's ball possession percentage during a match. Optical flow analysis tracks camera movement across frames, facilitating precise player movement measurement. Perspective transformation is applied to convey scene depth and perspective, enabling movement measurement in meters rather than pixels. Finally, player speed and distance covered are computed to provide comprehensive analysis.
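The last two steps, possession percentage and player speed, reduce to simple arithmetic once the earlier stages have done their work. The sketch below is an illustration under assumptions rather than the project's code: it assumes each frame is already labeled with the team holding the ball (or `None`), and that player positions are already converted to meters by the perspective transform. The function names are hypothetical.

```python
import math
from collections import Counter

def possession_percentages(ball_owner_per_frame):
    """Share of frames each team held the ball, ignoring unassigned frames."""
    counts = Counter(t for t in ball_owner_per_frame if t is not None)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {team: 100.0 * n / total for team, n in counts.items()}

def distance_and_speed(positions_m, fps):
    """Distance covered (m) and average speed (km/h) for one player.

    positions_m holds one (x, y) position in meters per consecutive frame.
    """
    if len(positions_m) < 2:
        return 0.0, 0.0
    distance = sum(math.dist(a, b)
                   for a, b in zip(positions_m, positions_m[1:]))
    elapsed_s = (len(positions_m) - 1) / fps
    return distance, distance / elapsed_s * 3.6  # m/s to km/h
```

In practice the speed is usually computed over short windows of frames instead of a whole clip, which smooths out jitter in the detected positions.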

Animatronics Eye Mechanism

This project involved building an animatronic eye mechanism, inspired by an open-source project. The whole design was 3D printed, and all the electronics were assembled. The project is utilizing an AI-based face shadowing model to give the eyes the most realistic movements. While still in progress, the project shows promising results in the field of robotics, with potential applications in fields such as filmmaking, animatronics, and prosthetics.

Mixed Reality Headset

3D printed mixed reality headset

Motivated by Project Northstar, I created a personalized mixed-reality headset using 3D printing, equipped with a Leap Motion mount for hand tracking. I also built an MR demo in Unity 3D that includes hand-tracking functionality.

Air Quality Index Detector

An Arduino-based, 3D-printed air quality index detector. Sensors such as the MQ-135, MQ-7, and a PM2.5 sensor are used to detect harmful gases like ammonia, alcohol, benzene, smoke, carbon dioxide, and carbon monoxide, as well as dust particles. An OLED displays the real-time Air Quality Index of the surroundings, and the data is streamed to the internet using the MQTT protocol.

Bipedal Walking Robot

Trained the Bipedal Walker using Stable-Baselines3 with the Proximal Policy Optimization (PPO) algorithm. Uses the Gymnasium (formerly OpenAI Gym) Box2D environment.

Imagine a world without caregivers—millions of disabled seniors rely on trusted companions, yet there aren’t enough. Our AI learns from caregivers via smart glasses, guiding substitutes in real time.

Our AI caregiving assistant is trained on videos of primary caregivers performing activities of daily living (ADLs) tailored to each patient's needs. In the absence of a primary caregiver, the AI can guide substitute caregivers with clear, step-by-step instructions specific to the patient. The AI monitors each action and, if a caregiver deviates from the patient’s preferred method, it provides corrections to ensure proper care.

Nebula

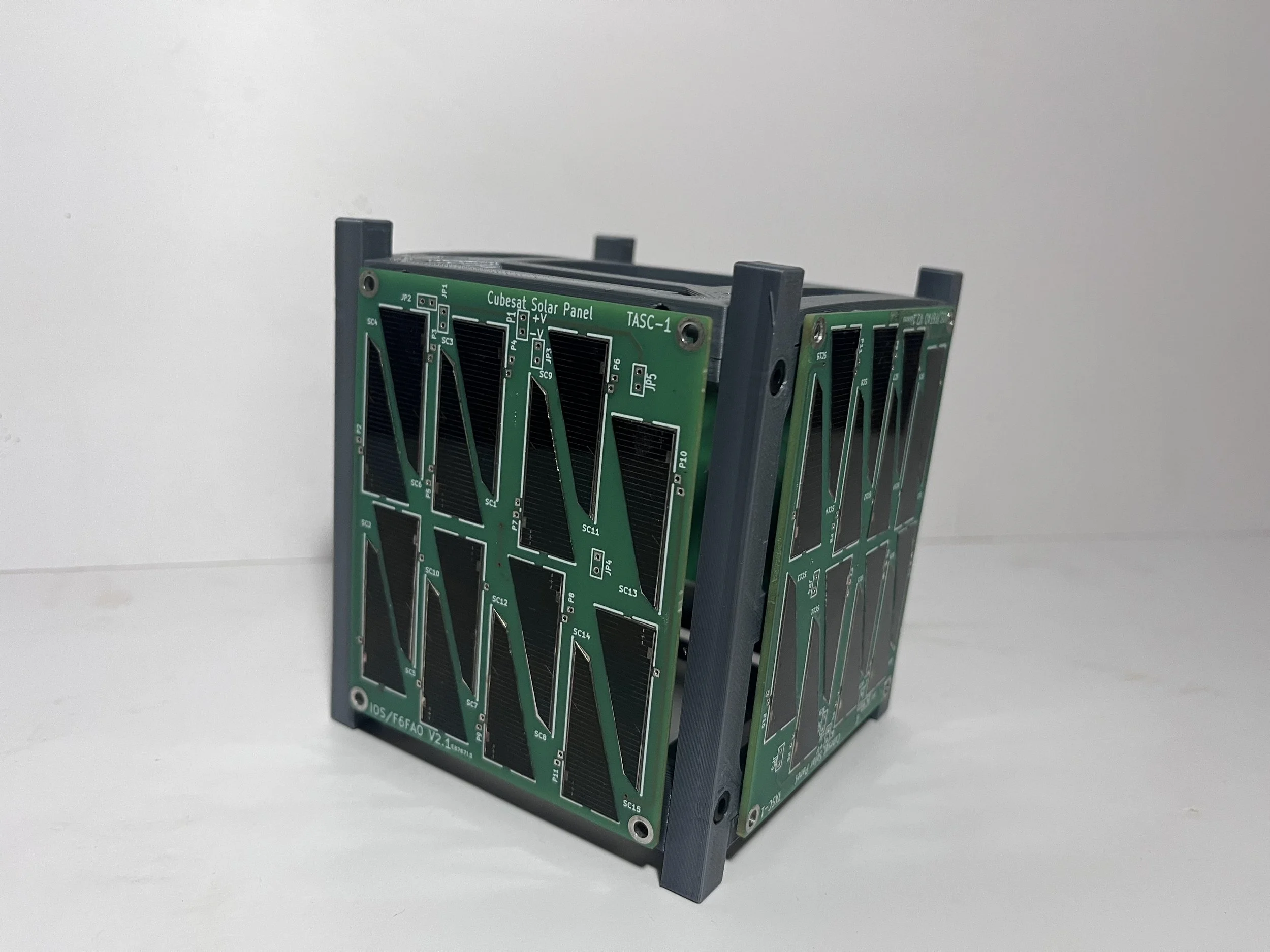

1U CubeSat launched to near-space

I constructed a CubeSat that was sent to the stratosphere, designed for detecting muon particles. The CubeSat was retrieved successfully, allowing us to collect data and conduct subsequent analysis.

Humanoid Head

3D printed a humanoid head. The designs are from an open-source project called InMoov. The humanoid head has 10 servos that can be controlled using a custom-designed, Python-based GUI. The robot can do gaze prediction and follow a user’s face.

ACHIEVEMENTS

TEDx Speaker

Talking about how AI is going to revolutionize the future

25+ Hackathon Wins

Won close to 28 national and international hackathons, including Carnegie Mellon, Georgia Tech, San Francisco State, NITK, Bangalore, and many more.

Raised $30,000

Converted a hackathon project into a startup and won $30,000 in various pitch competitions.

Media

Young entrepreneurs aim to contribute to global development with their tech innovation

The founders, Surabhi and Mahanthesh, met during their undergraduate degree. With a mixed combination of interests and a similar goal of becoming entrepreneurs, they began their journey of exploring each other’s skillsets and what they can bring to the table. The defining moment for them was what led them to their company Quantanx and its breakthrough product.

Where the right technologies are delivered for exponential growth…

Mahanthesh was always fascinated by technology since his early childhood. He used to observe the tech tools around him with keen interest and decided to dive more deeply into the tech space. Later, intrigued by electronics engineering, he decided to forge ahead in this direction where Robotics attracted him the most. Gaining absolute mastery over the software part of engineering, Robotics allowed him to comprehend how to integrate the appropriate solution when it comes to dealing with the real-time issue through his in-depth knowledge of the hardware and the software aspect of the tech space.

UPES Organises 24-hour Long Hackathon; Participants From 11 States

Team ‘The Hack Squad’ T296 from SIB Institute, Karnataka, won the 1st position by creating a 3D model to create an extended version of virtual reality and give realistic feedback to the user, winning money prize of Rs.30,000, trophy and medals, among other goodies.